Abstract

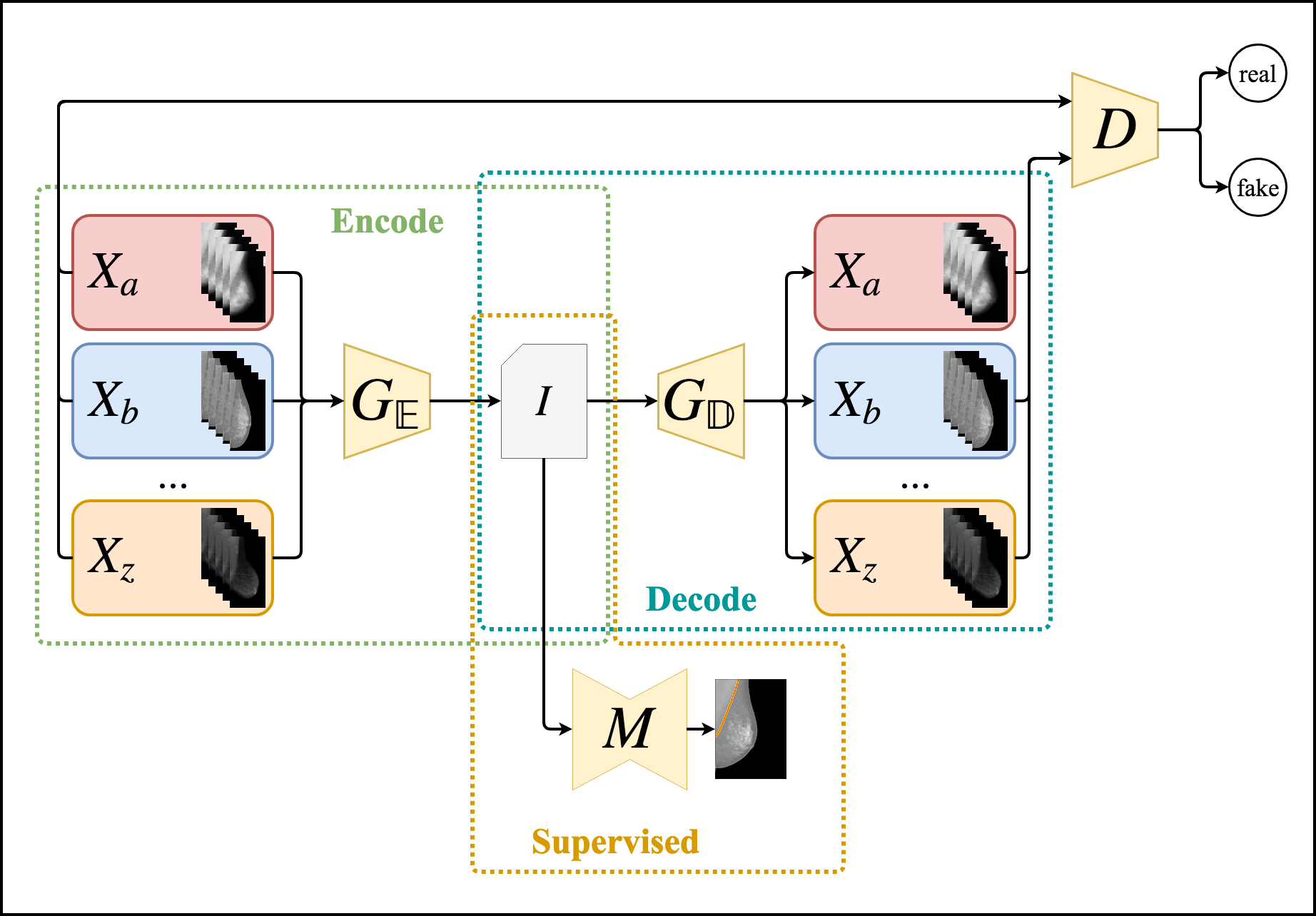

Distinct digitization techniques for biomedical images yield different visual patterns in samples from many radiological exams. These differences may hamper the use of data-driven Machine Learning approaches, such as Deep Learning methods, for inference over these images. Another important difficulty in this field is the lack of labeled data, even though unlabeled data is often abundant. Therefore, an important step in improving the generalization capabilities of these methods is to perform Unsupervised and Semi-Supervised Domain Adaptation between different datasets of biomedical images. To tackle this problem, in this work we propose an Unsupervised and Semi-Supervised Domain Adaptation method for dense labeling tasks in biomedical images using Generative Adversarial Networks for Unsupervised Image-to-Image Translation. We merge these unsupervised Deep Neural Networks with well-known supervised deep semantic segmentation architectures in order to create a semi-supervised method capable of learning from both unlabeled and labeled data, whenever labeling is available. We compare our method against traditional Transfer Learning baselines, such as unsupervised feature-space distance-based methods and pretrained models with and without fine-tuning, across several domains, datasets and segmentation tasks. We perform both quantitative and qualitative analyses of the proposed method and baselines in the distinct scenarios considered in our experimental evaluation. The proposed method shows consistently and significantly better results than the baselines in scarce labeled data scenarios, achieving Jaccard values greater than 0.9 in most tasks. Completely Unsupervised Domain Adaptation results were observed to be close to those of Fully Supervised Domain Adaptation, the traditional procedure of fine-tuning pretrained Deep Neural Networks.
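
The semi-supervised behavior described above, learning from labels only "whenever labeling is available", can be illustrated with a minimal pure-Python sketch. This is not the CoDAGAN objective: `unsup_loss` is an opaque stand-in for whatever unsupervised image-to-image translation terms the model computes, binary cross-entropy is used only as an illustrative supervised segmentation loss, and the names `pixel_bce`, `semi_supervised_loss` and `sup_weight` are hypothetical.

```python
import math
from typing import List, Optional

Mask = List[List[int]]      # binary ground-truth mask (0 = background, 1 = foreground)
Probs = List[List[float]]   # predicted foreground probability per pixel


def pixel_bce(probs: Probs, target: Mask, eps: float = 1e-7) -> float:
    """Mean per-pixel binary cross-entropy between probabilities and a binary mask."""
    total, count = 0.0, 0
    for prow, trow in zip(probs, target):
        for p, t in zip(prow, trow):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
            count += 1
    return total / count


def semi_supervised_loss(unsup_loss: float, probs: Probs,
                         target: Optional[Mask], sup_weight: float = 1.0) -> float:
    """Hypothetical combined loss: the unsupervised term is always used;
    the supervised term is added only when a label (target) exists."""
    if target is None:  # unlabeled sample: no supervised signal available
        return unsup_loss
    return unsup_loss + sup_weight * pixel_bce(probs, target)
```

With an unlabeled sample (`target=None`) only the unsupervised term contributes; with a labeled sample the supervised term is added on top, so the same training step can consume both kinds of data.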

Official Implementation

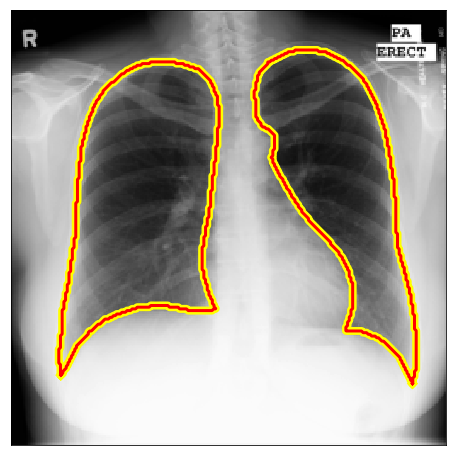

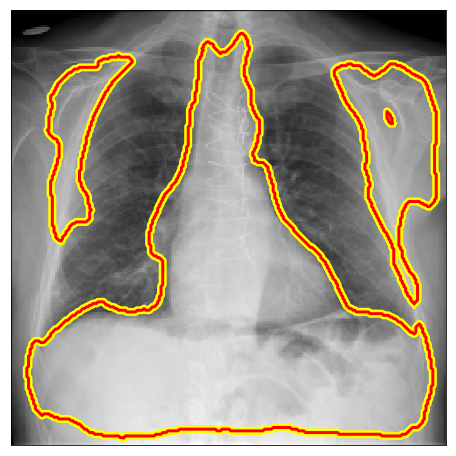

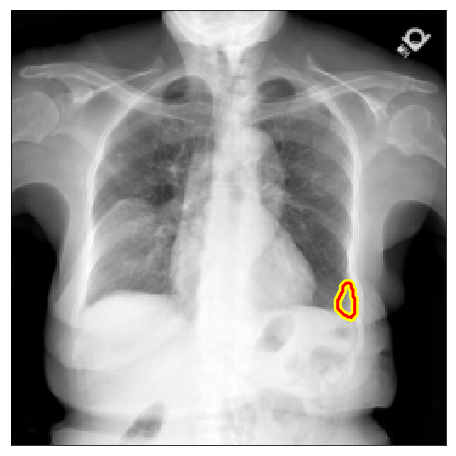

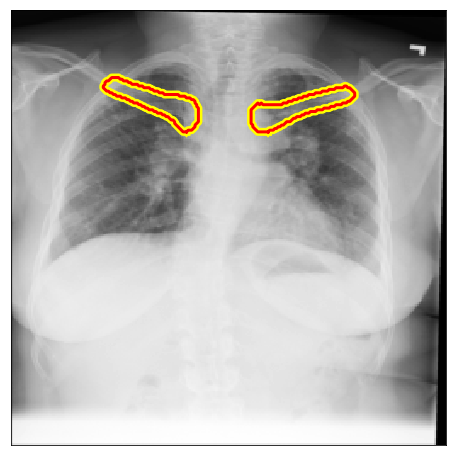

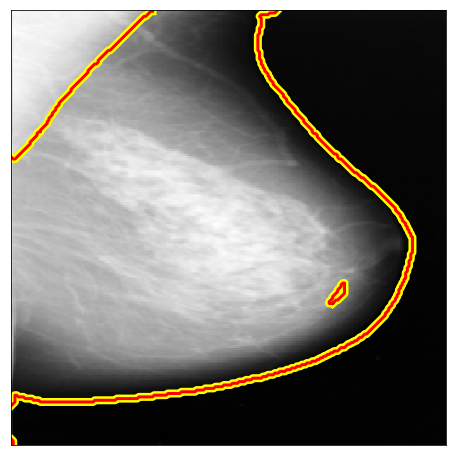

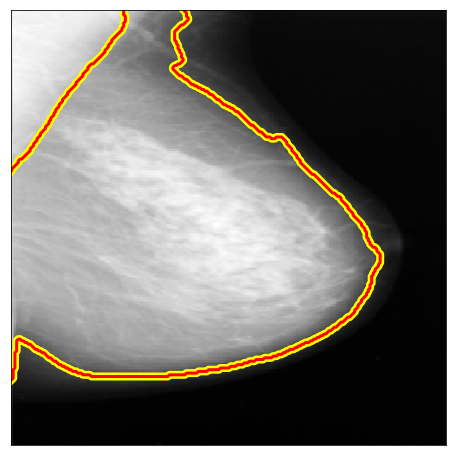

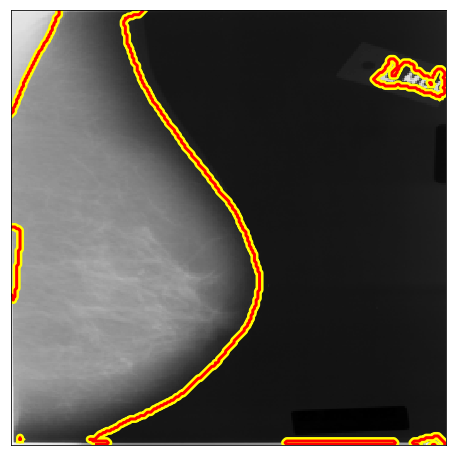

Qualitative Assessment

Below are some segmentation predictions from our method in several distinct experiments, datasets and image domains. All images, labels and ground truths from our main experiments can be found in our Google Drive folder.
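
Predictions such as those below are compared with their ground truths using the Jaccard index (intersection over union) reported in the abstract. The following pure-Python sketch computes it for two binary masks stored as nested lists; the function name `jaccard` and the convention that two empty masks score 1.0 are assumptions for illustration, not taken from the official implementation.

```python
from typing import List

Mask = List[List[int]]  # binary mask: nonzero = foreground


def jaccard(pred: Mask, truth: Mask) -> float:
    """Jaccard index (intersection over union) between two binary masks of equal shape."""
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")
    inter = union = 0
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            a, b = bool(p), bool(t)
            inter += int(a and b)  # pixel is foreground in both masks
            union += int(a or b)   # pixel is foreground in at least one mask
    # Assumed convention: two empty masks are treated as a perfect match.
    return 1.0 if union == 0 else inter / union
```

For example, a prediction `[[1, 1, 0], [0, 1, 0]]` against a ground truth `[[1, 0, 0], [0, 1, 1]]` shares 2 foreground pixels out of 4 in the union, giving a Jaccard value of 0.5.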

Lungs

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| JSRT | | | | | |
| OpenIST | | | | | |
| Shenzhen | | | | | |
| Montgomery | | | | | |
| Chest X-Ray 8 | | | | | |
| PadChest | | | | | |
| NLMCXR | | | | | |
| OCT CXR | | | | | |

Heart

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| JSRT | | | | | |
| OpenIST | | | | | |
| Shenzhen | | | | | |
| Montgomery | | | | | |
| Chest X-Ray 8 | | | | | |
| PadChest | | | | | |
| NLMCXR | | | | | |

Clavicles

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| JSRT | | | | | |
| OpenIST | | | | | |
| Shenzhen | | | | | |
| Montgomery | | | | | |
| Chest X-Ray 8 | | | | | |
| PadChest | | | | | |
| NLMCXR | | | | | |

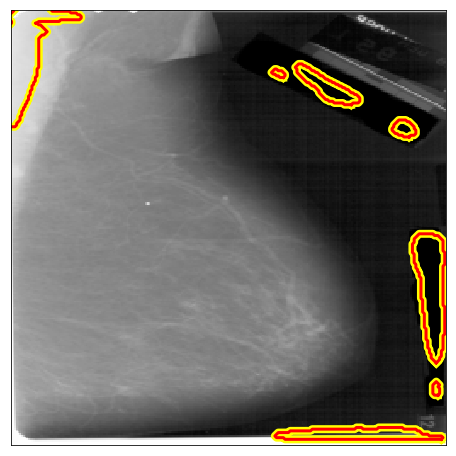

Pectoral Muscle

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| INbreast | | | | | |
| MIAS | | | | | |
| DDSM B/C | | | | | |
| DDSM A | | | | | |
| BCDR | | | | | |
| LAPIMO | | | | | |

Breast Region

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| INbreast | | | | | |
| MIAS | | | | | |
| DDSM B/C | | | | | |
| DDSM A | | | | | |
| BCDR | | | | | |
| LAPIMO | | | | | |

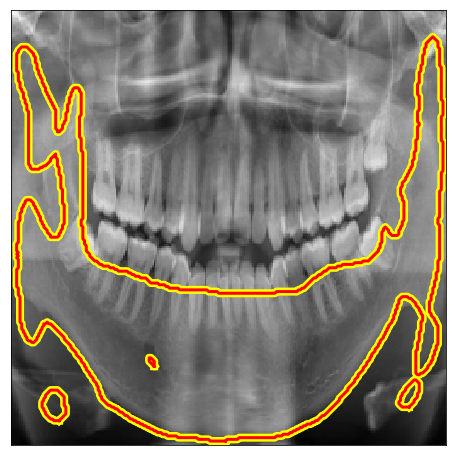

Teeth

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| IvisionLab | | | | | |
| IvisionLab | | | | | |
| Panoramic X-ray | | | | | |
| Panoramic X-ray | | | | | |

Mandible

| Dataset | Image | Ground Truths | Pretrained U-Net | D2D | CoDAGAN |
|---|---|---|---|---|---|
| Panoramic X-ray | | | | | |
| Panoramic X-ray | | | | | |
| IvisionLab | | | | | |
| IvisionLab | | | | | |